WWW.MASHED.COM
What Happened To Foam Coolers After Shark Tank?
Foam Coolers made a splash on "Shark Tank" and walked away with a sizable deal. Here's what happened after the show ended and where the company is at now.

WWW.MASHED.COM
McDonald's Hot Honey Menu Review: Sweet-Toothed Heatseekers Will Be Lovin' It
McDonald's is following the hot honey trend by launching menu items featuring the sweet and spicy sauce. Find out if the new items were a love at first bite.

WWW.MASHED.COM
How Costco Solved The Most Frustrating Thing About Its Chicken Breasts
Costco's Kirkland Signature fresh boneless, skinless chicken breasts have been a cause for concern for shoppers for a long time, but the issue may be solved.

WWW.MASHED.COM
This Quirky Vintage Sandwich From The 1900s Is A Must-Try For Popcorn Lovers
Popcorn is a tasty treat often eaten by the handful - but this one vintage sandwich gives your favorite movie theater treat a whole new flavor profile.

WWW.MASHED.COM
The Ridiculous Amount Of Nuggets McDonald's Sells Every Year
McDonald's has been around for decades, and its popularity is through the roof. Here's the genuinely ridiculous number of McNuggets the chain sells yearly.

WWW.MASHED.COM
12 Regional Brands Of Potato Chips We Wish Were Nationwide
Here's a list of the top regional brands of potato chips we wish were available throughout the U.S. But these brands are rare and are quickly disappearing.

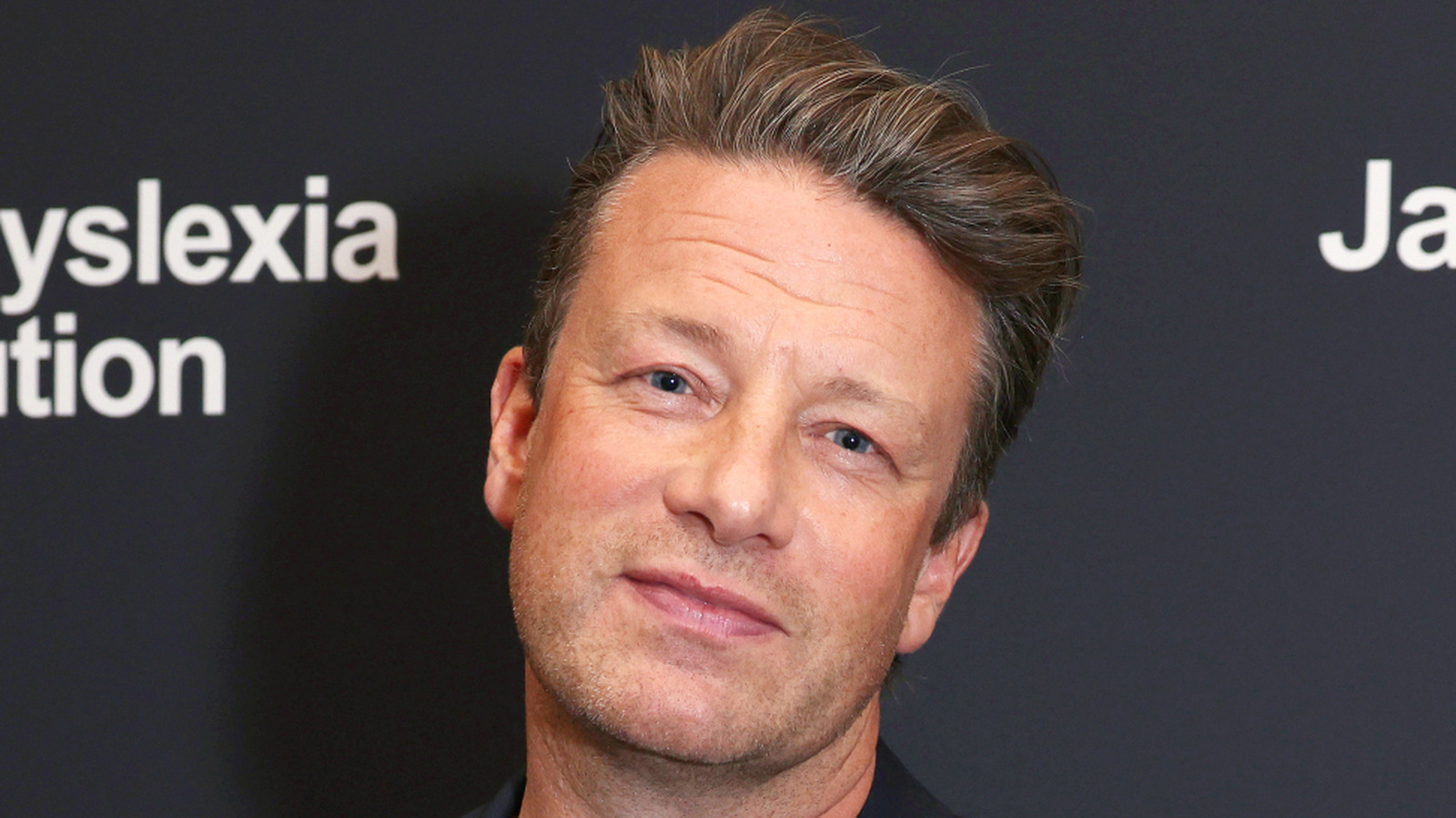

WWW.MASHED.COM
Jamie Oliver's Go-To Pepper For Adding Flavor To A Dish
Jamie Oliver knows how to cook and season some great meals. Along with standard seasonings, he uses a particular pepper to boost the flavor of a dish.

WWW.MASHED.COM
How Is Texas Roadhouse Changing In 2026?
Texas Roadhouse is one of the biggest sit-down chains in the U.S., but it's not resting on popularity alone. The restaurant has some changes planned for 2026.

WWW.MASHED.COM
Why This Fancy Kitchen Tech Upgrade Isn't Worth The Hype
There are many different ways to upgrade your kitchen with new tech, but some are more worthwhile than others. Here's one not worth the hype.
