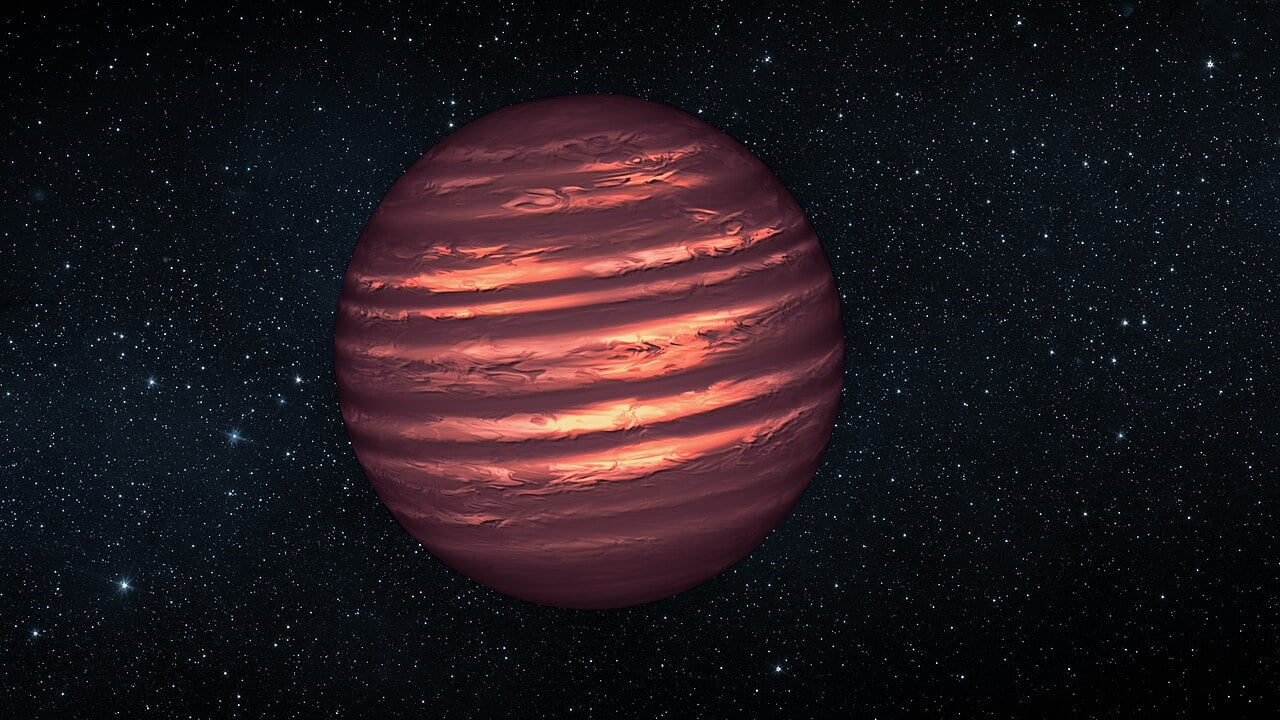

WWW.UNIVERSETODAY.COM

The Mystery of the Fading Star

Astronomers have solved the mystery of a star that dimmed dramatically for nearly 200 days, one of the longest stellar dimming events ever recorded. The culprit appears to be either a brown dwarf or a super-Jupiter with an enormous ring system, forming a giant saucer-like structure that blocked 97% of the star's light as it passed in front. This rare alignment offers scientists a unique opportunity to study planetary-scale ring systems far beyond our Solar System.

WWW.MASHED.COM

Why Choosing Wine Before Your Meal Can Backfire

Here's why picking wine too early can affect your entire meal, from flavors to enjoyment, shaping your dining experience from beginning to end.

WWW.GAMEBLOG.FR

A Metal Gear Solid fan? This new game is for you

The next favorite of fans of the Metal Gear Solid (MGS) formula may well have been revealed at the first State of Play presentation of 2026.

WWW.GAMEBLOG.FR

Netflix welcomes one of the most iconic science-fiction series of all time

Today Netflix is adding one of the most iconic science-fiction series of all time, a huge hit in the 1990s and 2000s that left its mark on an entire generation of fans.

The Chain Behind A Famous John Mellencamp Lyric Is Down To One Location

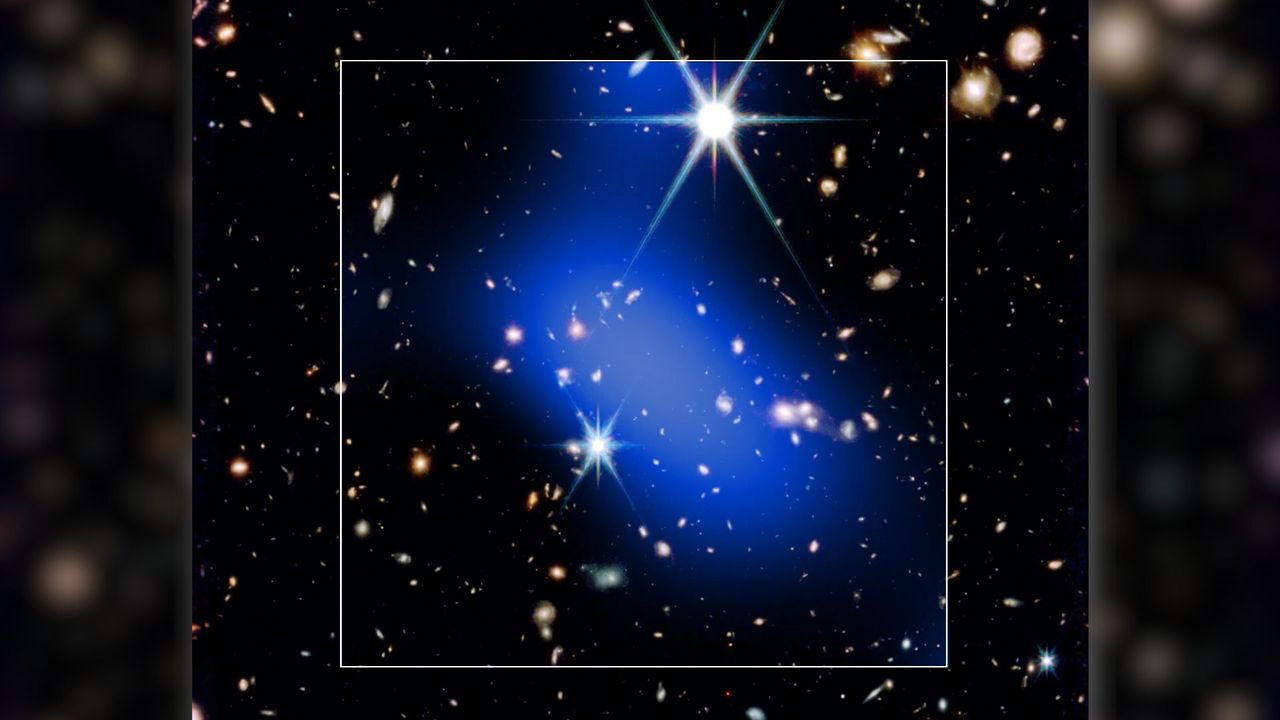

WWW.LIVESCIENCE.COM

Deepest views from James Webb and Chandra telescopes reveal a monster object that defies theory | Space photo of the week

The James Webb Space Telescope and the Chandra X-ray Observatory have captured the clearest image yet of a galaxy cluster in the making, seen when the universe was only one billion years old.

WWW.UNIVERSETODAY.COM

Hunting Cosmic Ghosts from the Edge of Space

After five years of development and a nail-biting launch from Antarctica, the PUEO experiment has completed a 23-day balloon flight at the edge of space, hunting for some of the most energetic particles in the universe. The instrument flew at 120,000 feet above Antarctica, using the entire continent as a detector to search for ultra-high-energy neutrinos, elusive particles that could reveal secrets about the universe's most violent events. Now safely back on the ice with 50-60 terabytes of data, scientists are preparing to search through the results to see if they've caught these messengers from distant galaxies.

WWW.PCGAMESN.COM

The next Where Winds Meet expansion opens the door to a whole new region, and the action game's most stylish weapon pairing yet

Everstone Studios has just released a gorgeous preview trailer for the new Where Winds Meet expansion, which takes us to the much-anticipated Hexi region. This next chapter of the free-to-play stylish action game promises a "dreamlike journey" that will pull us back in time "to witness the legends of heroes". Alongside it, we can expect the first of several new combat styles, which are looking particularly flashy, although I might be even more excited by the developer's newfound commitment to improving its lackluster localization.

Read the full story on PCGamesN: The next Where Winds Meet expansion opens the door to a whole new region, and the action game's most stylish weapon pairing yet
