test jetbrains

Recent Updates

-

The Rider 2025.2 Release Candidate Is Here!

Rider 2025.2 is shaping up to be a big release, especially if you're a game developer. This update introduces powerful new debugging features for Unity, Unreal Engine, and Godot, including source-level shader debugging, mixed-mode debugging for native and managed code, and stepping time improvements.

Beyond game dev, Rider 2025.2 delivers a range of enhancements for performance, observability, and AI-powered productivity. With the new OpenTelemetry plugin, a reworked Monitoring tool window, and support for connecting external AI clients via MCP (Model Context Protocol), this release will help you build more performant and stable applications, and enjoy the process, too.

Download the RC and explore the changes: Download Rider 2025.2 RC

Release highlights

Reworked Monitoring tool with automatic issue detection

The Monitoring tool window has been entirely reimagined to make performance optimization for .NET applications simpler and more insightful. You'll find new interactive charts, automatic issue detection on Windows, and seamless integration with Rider's bundled profilers, dotTrace and dotMemory. With just a couple of clicks, you can navigate from a performance issue to the responsible method in the editor.

OpenTelemetry plugin for in-IDE runtime observability

Rider now offers an OpenTelemetry plugin, bringing runtime metrics and logs directly into your IDE. You can visualize app behavior, generate architecture diagrams, and click through from logs to the exact source code, all without leaving Rider or opening an external dashboard. Learn more about the new tool here.

The OpenTelemetry plugin also integrates with MCP servers, allowing AI tools to access logs, architecture diagrams, and other observability data.

One-click MCP server setup to connect external AI clients to Rider

Starting with version 2025.2, JetBrains IDEs come with an integrated MCP server, allowing external clients such as Claude Desktop, Cursor, VS Code, and others to access tools provided by the IDE. This lets users control and interact with JetBrains IDEs without leaving their application of choice.

For external clients like Claude Code, Claude Desktop, Cursor, VS Code, and Windsurf, configuration can be performed automatically. Go to Settings | Tools | MCP Server, click Enable MCP Server, and in the Clients Auto-Configuration section, click Auto-Configure for each client you want to set up for use with the MCP server. This will automatically update their JSON configuration. Restart your client for the configuration to take effect.

Performance improvements

Improved memory consumption

We've fine-tuned Rider's garbage collection settings, reducing peak managed memory usage by up to 20%, which is especially helpful when working on large codebases.

Faster debugger stepping

Stepping through code is now snappier, even when working with complex watches like large Unity objects or LINQ expressions. Rider now cancels unnecessary evaluations earlier for a smoother debugging experience.

Code analysis and language support

Extended C# 14 support

Rider 2025.2 brings initial support for the latest additions in C# 14, as well as a range of other improvements to code analysis. Here are some highlights:

- Extension members: Initial support for code completion, usage search, and refactorings.
- Partial events and constructors.
- Null-conditional assignments using a?.b = c and a?[i] = c.
- User-defined compound assignment operators.

Roslyn support

Roslyn support in this release includes enhanced CompletionProvider functionality, enabling richer context-aware code completion through analyzers and community packages. It also introduces Roslyn suppressors, which silently suppress diagnostics in the background to reduce noise and improve clarity in the editor.

F# improvements

The F# plugin brings better import suggestions, smarter code annotations, improved type inference, and fixes for interop issues and F# scripts.

Debugger updates

Native debugging improvements

- Low-level exception suppression: You can now prevent Rider from breaking on framework-level assertions like int3, either globally or at runtime.
- Step filters: Skip over library functions by treating Step Into as Step Over for selected methods.
- Pause All Processes: Coordinate debugging across multiple processes with new actions to pause, resume, or stop them together.
- Remote native debugging: Attach to native processes on a remote machine via SSH. [Windows only]

Game development

Unity

- Shader debugging: Debug ShaderLab code in Rider using the integrated Frame Viewer with full support for breakpoints, draw calls, texture inspection, and more. [Windows only]
- Mixed-mode debugging: Step seamlessly between managed (C#) and native (C++) code in Unity projects. [Windows only]
- Unity Profiler integration is now enabled by default to display profiling results directly in your editor.

Unreal Engine

- Show Usages for UINTERFACE in Blueprints.
- Code completion for BlueprintGetters and Setters.
- .uproject loader suggestions: Use the native .uproject format instead of generating solution files, simplifying project setup on Windows, Mac, and Linux.

Godot

For Godot development, Rider now bundles a new GDScript plugin, providing:

- Smarter completions for nodes and resources.
- Ctrl+Click navigation, the Rename refactoring, and Find Usages.
- Scene Preview tool window with interactive tree, parent method highlights, and performance monitors.
- Deeper code inspections, stricter untyped value handling, and many fixes for test running and C++ build scenarios.

Web and database development

TypeScript-Go language server support

Rider 2025.2 introduces experimental support for the new TypeScript-Go language server, bringing improved performance and modern architecture to TypeScript development.

You can enable it in your project by installing the @typescript/native-preview package as a dependency in place of TypeScript. Rider will automatically detect and use the correct language server.

SQL project support

Rider now provides support for SQL database projects via a new bundled plugin. The plugin works out of the box and delivers a smoother experience when working with SQL database projects.

Deprecations and notices

- Code coverage for Mono and Unity has been removed in this release due to low usage and high maintenance costs. Coverage may return once Unity adopts CoreCLR.
- Dynamic Program Analysis (DPA) will be sunset in Rider 2025.3, with parts of its functionality integrated into the new Monitoring tool window for a more consistent performance analysis experience.

You can download and install Rider 2025.2 RC from our website right now: Download Rider 2025.2 RC

We'd love to hear what you think. If you run into any issues or have suggestions, please let us know here in the comments section, over on our issue tracker, or on social media (X and Bluesky).
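The null-conditional assignment listed under the C# 14 highlights (a?.b = c) may be easier to picture with a small model. The following is only a rough Python analogue of the behavior as we understand it: the assignment is skipped when the receiver is null, and the right-hand side is not evaluated in that case. The names `Customer`, `make_order`, and `assign_if_present` are hypothetical and exist only for this illustration; this is not C# and not anything Rider generates.

```python
class Customer:
    """Hypothetical receiver type used only for this illustration."""

    def __init__(self):
        self.order = None


evaluations = []


def make_order():
    """Stand-in for the right-hand side; records each time it is evaluated."""
    evaluations.append("evaluated")
    return "order-1"


def assign_if_present(target, attr, value_factory):
    """Rough analogue of `target?.attr = value` in C# 14.

    The value is passed as a callable so that, when target is None,
    the right-hand side is never evaluated at all.
    """
    if target is None:
        return
    setattr(target, attr, value_factory())


customer = Customer()
assign_if_present(customer, "order", make_order)  # receiver present: assigns
assign_if_present(None, "order", make_order)      # receiver absent: does nothing
```

After both calls, `customer.order` holds the new value and `make_order` has run exactly once, which is the point of the feature: no separate null check is needed around the assignment.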

-

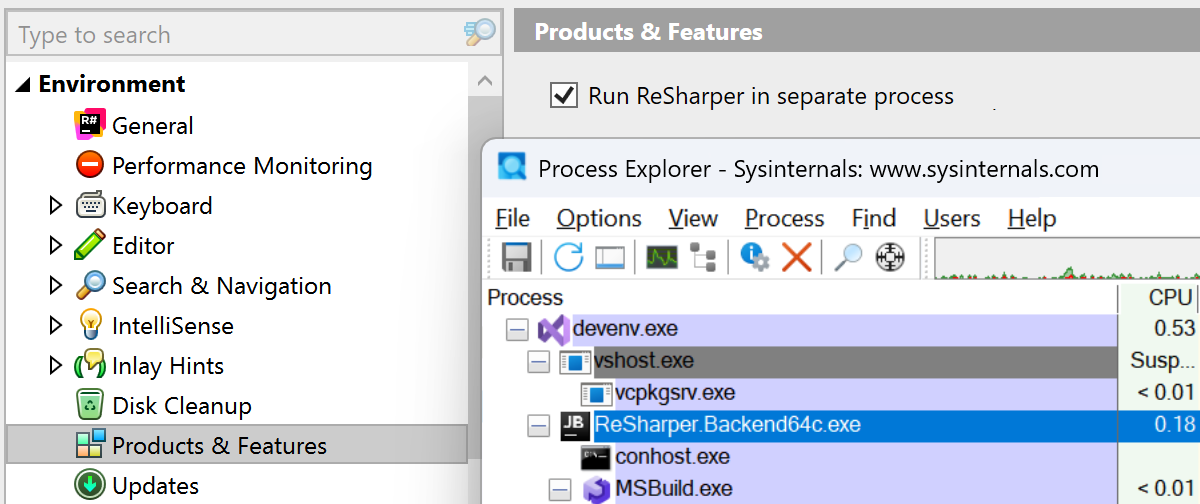

BLOG.JETBRAINS.COM
The ReSharper and .NET Tools 2025.2 Release Candidates Are Now Available

The ReSharper and .NET tools 2025.2 Release Candidates are ready for you to try. This release introduces the public preview of ReSharper's Out-of-Process mode, adds support for the latest C# 14 and C++26 features, and brings a range of improvements for performance, refactorings, and inspections.

If you'd like to try out what's coming in the next stable release, you can download the RC build here: Download ReSharper 2025.2 RC

Performance improvements

This release includes several updates aimed at improving performance and responsiveness:

- Out-of-Process mode [Public Preview] is an architectural change in ReSharper designed to improve stability and performance by decoupling it from the Visual Studio process. To enable this mode, go to ReSharper's Options | Environment | Products & Features, select Run ReSharper in separate process, click Save, and reinitiate ReSharper to apply the changes.
- The Rename refactoring is now faster, uses less memory, and offers better progress reporting.
- We've reduced the performance impact of in-place refactorings, minimizing interference with typing and improving the overall editing experience.
- Razor and Blazor processing has been optimized by reducing memory usage and eliminating redundant work on the part of ReSharper.
- ReSharper now indexes solutions faster on modern hardware by removing HDD-era sequential disk access logic and optimizing for SSDs' parallel I/O capabilities.
- Solution loading has been improved for projects that include references to Source Generators.

C# support

ReSharper 2025.2 adds initial support for the latest C# 14 features:

- Extension members (initial support).
- Partial events and constructors.
- Null-conditional assignments (e.g. a?.b = c).
- User-defined compound assignment operators.
- New preprocessor directives: #! and #:.

Logging improvements

ReSharper now helps you write more efficient and maintainable logging code with ILogger:

- A new refactoring lets you convert standard logger calls into [LoggerMessage]-based methods.
- When using ILogger<T>, ReSharper suggests the current type automatically.
- Completion is now available for parameters in [LoggerMessage] attributes.
- ReSharper detects and highlights missing parameters or duplicates in logging declarations.

Code quality

CQRS Validation [Experimental]

ReSharper 2025.2 introduces experimental inspections to help enforce the Command Query Responsibility Segregation (CQRS) pattern. It detects naming mismatches, context intersections, and conflicts between annotations and naming. Quick-fixes are available to remove redundant attributes or rename entities to follow conventions.

CQRS validation is disabled by default and can be enabled in ReSharper's Options | Code Inspection | CQRS Validation. The required annotations are available in JetBrains.Annotations.

Coding productivity

Several new context actions and inspections are available in this release:

- In-place refactorings now appear via inlay hints.
- A new context action lets you convert a method to a local function.
- A new inspection detects duplicated switch arms and offers a fix to merge them.
- Support has been added for [ConstantExpected], with warnings when non-constant values are used.

C++ support

ReSharper C++ 2025.2 introduces the following updates for modern C++ development:

- Initial support for C++26 language features.
- Code insight and completion in code containing multiple #if directives.
- A new syntax style for keeping definitions sorted by declaration order.
- Highlighting for global constants, OpenMP variable support, and more.

Continuous integration

As of this release, the TeamCity extension for Visual Studio is being discontinued. This change is intended to reduce long-term maintenance overheads and focus development on the most impactful tooling.

What's new in dotTrace and dotMemory 2025.2 RC

During our latest release cycle, our work on the .NET profiling tools has focused on improving their integration into JetBrains Rider. The Monitoring tool inside Rider has been reimagined to provide a more seamless and informative experience when analyzing application performance during development.

The reworked Monitoring tool window now features interactive charts for CPU usage, memory consumption, and garbage collection (GC) activity, giving you an at-a-glance view of your application's runtime behavior.

From the tool window, you can initiate performance or memory profiling sessions for deeper analysis in the built-in dotTrace and dotMemory profilers or their standalone counterparts. On Windows, the Monitoring tool also automatically detects performance bottlenecks, UI freezes, and GC issues. Detected problems and time intervals selected on the charts can be further investigated in dotTrace.

What's new in dotCover 2025.2 RC

This release introduces performance optimizations and removes support for some technologies with low usage. The following are no longer supported:

- Mono and Unity projects.
- IIS Express, WCF, WinRT, external .NET processes, and MAUI.

The command-line runner has been updated and modernized:

- dotcover cover now handles all target types.
- XML configuration files have been replaced by text files with CLI arguments.
- The .exe runner has been removed from the NuGet package.
- dotCover is now available as a .NET tool.

Share your feedback

You can download the latest build right now from the ReSharper 2025.2 EAP page or install it via the JetBrains Toolbox App. Download ReSharper 2025.2 RC

It's not too late to share your feedback on the newest features! Our developers are still putting the final touches on the upcoming release. Tell us what you think in the comments below or by reaching out to us on X or Bluesky.
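The duplicated-switch-arms inspection mentioned under Coding productivity can be pictured with a toy model. The sketch below is in Python rather than C#, and it assumes that two arms count as duplicates when their bodies are textually identical; how ReSharper actually compares arms is not described in the post, so treat `merge_duplicate_arms` and the sample data as hypothetical.

```python
def merge_duplicate_arms(arms):
    """Group case labels whose bodies are identical.

    `arms` is a list of (label, body) pairs. Bodies are compared as plain
    strings, which is a simplifying assumption for this illustration.
    The first-seen order of bodies is preserved.
    """
    merged = {}
    for label, body in arms:
        merged.setdefault(body, []).append(label)
    return [(labels, body) for body, labels in merged.items()]


# Hypothetical switch with two arms that share the same body.
arms = [
    ("Red", "return Stop"),
    ("Amber", "return Wait"),
    ("Yellow", "return Wait"),
    ("Green", "return Go"),
]
merged_arms = merge_duplicate_arms(arms)
```

Here the Amber and Yellow arms collapse into a single arm with two labels, while Red and Green are left alone. A real fix would also have to confirm that reordering or combining patterns does not change which arm matches first.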

-

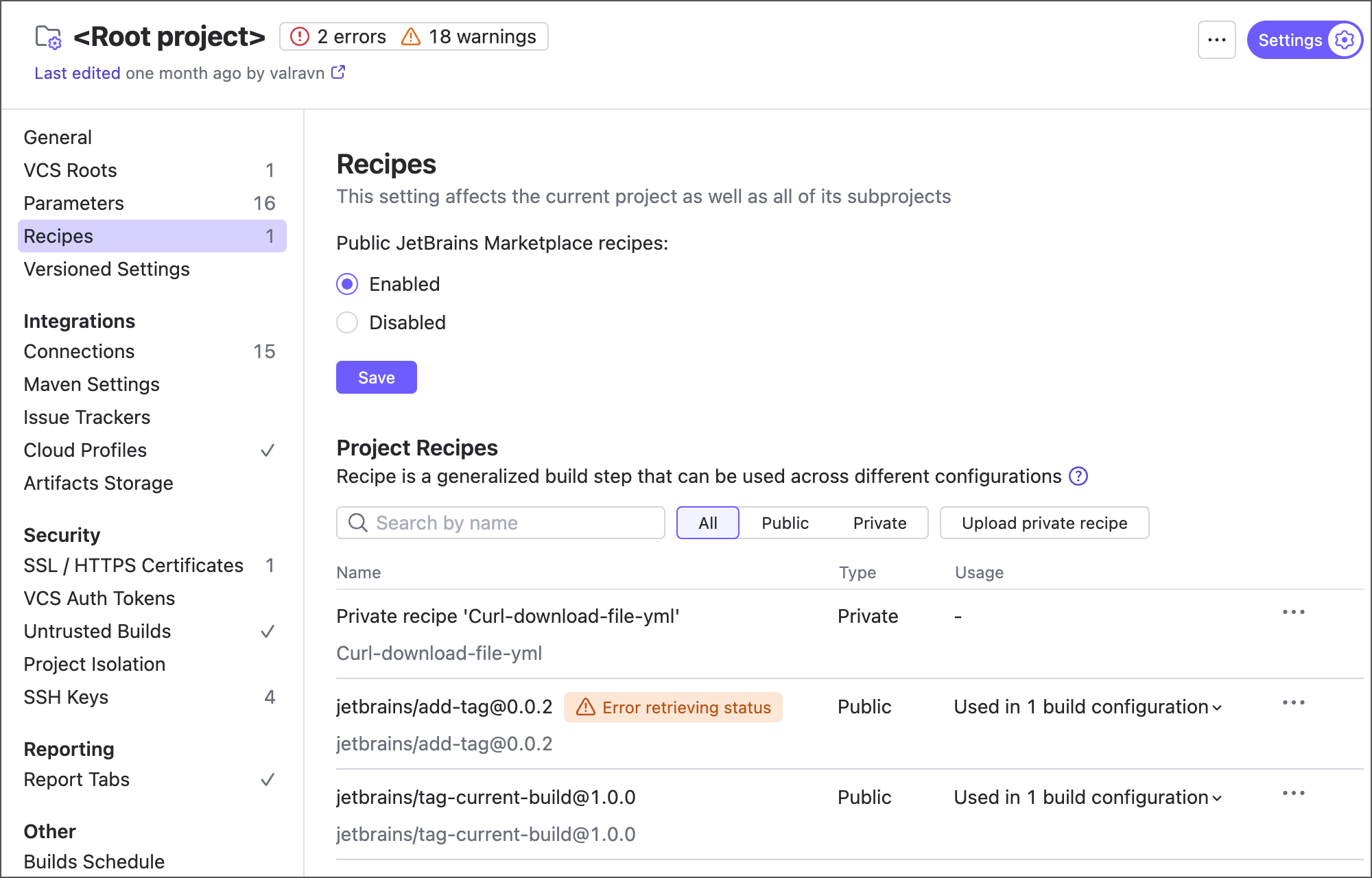

BLOG.JETBRAINS.COM
What's New in TeamCity 2025.07: Public Recipes, Pipelines, Dependency Control, and More

We're excited to announce the launch of TeamCity 2025.07! This release brings a range of improvements across the UI, Perforce integration, recipes, and more. It is also the first version to include pipelines: a reimagined TeamCity experience that focuses on a healthy balance of functionality and an intuitive design-first workflow.

Read on to learn what's new.

Public recipes

In version 2025.03, we announced the deprecation of classic TeamCity meta-runners and a shift to fluent, YAML-based recipes. You can now find around 20 JetBrains-authored recipes on JetBrains Marketplace. These extend TeamCity's built-in capabilities with integrations for various tools (AWS CLI, Slack, Node.js, and more) and introduce automation for routine actions like pinning builds, tagging, and publishing artifacts mid-build.

This release also introduces support for third-party recipes. You can now upload your own to the Marketplace and explore community-created options. All third-party recipes are verified by JetBrains and certified as safe to use in any project.

Learn more: Working with recipes

UI updates

As part of our ongoing effort to simplify and enhance the TeamCity UI, this release introduces several updates. Highlights include a redesigned side panel that can now auto-hide to maximize your workspace, a new What's New widget to keep you informed of key updates, an improved Projects dropdown menu, and more.

TeamCity pipelines now in early access

In several earlier release cycles, we introduced major UI updates to pave the way for integrating TeamCity Pipelines into the core product. With the 2025.07 update, pipelines are finally available on regular TeamCity servers as part of our Early Access Program (EAP).

Pipelines offer an intuitive, user-friendly interface designed to simplify CI/CD setup. Easily configure private container and NPM registry connections in a few clicks, use YAML to create building routines from scratch, manage output files and parameters from a single menu, define job dependencies using our visual editor's intuitive drag-and-drop interface, and more.

That said, pipelines are still evolving and currently support a very limited number of build steps and features. We expect to deliver the first feature-complete version of this functionality in TeamCity 2025.11. To avoid confusing users who expect the full range of TeamCity capabilities without compromise, we've opted to keep pipelines initially hidden from the main TeamCity UI.

If you're ready to give the pipelines EAP a try, head over to the dedicated page or click the Join Early Access program link in the TeamCity UI. You'll find a transparent overview of current features, limitations, and our roadmap. Leave your email, and we'll send you simple setup instructions. It only takes a few clicks and doesn't require you to install any additional patches or software beyond your 2025.07 server.

Sign up for early access

Project isolation

By default, any project's build configuration can add snapshot and artifact dependencies to your configurations. This means any external project can start new builds and/or import artifacts produced by your configuration.

Starting with version 2025.07, you can isolate sensitive configurations using the corresponding Project isolation section of your project settings.

Learn more: Project isolation

Kubernetes Executor updates

Introduced several releases ago, the Kubernetes executor leverages your existing Kubernetes clusters by turning them into independent orchestrators for TeamCity builds. Unlike with regular cloud agents that are fully managed by TeamCity, this integration allows the server to offload the build queue to a k8s cluster, granting the latter full control over the pod lifecycle.

TeamCity 2025.07 introduces a range of Kubernetes executor updates:

- Executors are now natively integrated into TeamCity's default prioritization mechanism. When a build is queued, TeamCity first checks for a free self-hosted agent, then for cloud profiles that can launch a compatible agent. If none are available, the build is offloaded to an executor.
- Implicit agent requirements are now correctly recognized. Build steps can impose implicit tooling requirements on agents, like requiring Docker or Podman for containerized steps, or the .NET 8 SDK for .NET builds. As of 2025.07, TeamCity can correctly match these requirements with pod specifications, ensuring builds are never offloaded to executors that cannot run them.
- There are numerous bug fixes for various issues, such as TeamCity ignoring the maximum build limit, PowerShell steps failing to run, excessive build log errors, and more.

Learn more: Kubernetes Executor

Enhanced build approval conditions

Before version 2025.07, each build approval rule was treated as a separate condition. All conditions had to be met for the build to proceed. For instance, the following setup requires four votes: two from the QA group, one from a team lead, and one from a project admin:

user:teamlead
user:projectadmin
group:QA:2

Starting with TeamCity 2025.07, you can merge individual users and groups into a single entity with a shared vote count. The following rule now only requires any two votes from the listed users and members of the specified groups:

(groups:QA,users:teamlead,projectadmin):2

This allows flexible combinations, such as two QA members, one QA member and a team lead, etc.

Learn more: Build approval

Perforce integration enhancements

Support for multiple shelve triggers

Build configurations now support multiple Perforce shelve triggers. Previously, adding one trigger blocked the option to add more via the TeamCity UI.

Clear TeamCity-generated workspaces

You can now clear both agent and server workspaces created by TeamCity.

- Configurations with stream-based Perforce VCS roots now feature an updated Delete Perforce Workspaces dialog. It lets you remove inactive workspaces created by build agents, with the inactivity period (in days) selectable within the dialog.
- The Perforce Administrator Access connection and Perforce VCS root settings now include an option to automatically remove workspaces created by TeamCity build agents. Enabling this in the VCS root clears workspaces from both bare-metal and cloud agents after builds finish, while the connection setting removes cloud agent workspaces during scheduled server clean-ups.

Miscellaneous enhancements

- Artifacts with identical names produced by different batches of parallel tests are now shown in separate tabs. Previously, the Artifacts tab showed only the most recently produced artifact.
- TeamCity now recognizes the <kotlin.compiler.incremental>true</kotlin.compiler.incremental> line in DSL pom.xml files, which allows you to enable incremental compilation for your Kotlin scripts.
- You can now override the server callback URL that TeamCity OAuth connections utilize by setting the new teamcity.oauth.redirectRootUrlOverride internal property.
- SSH keys uploaded or generated in TeamCity are now stored in encrypted form, reducing the risk if the data directory is compromised. Keys added before the server upgrade remain unencrypted, so you must re-upload them to enable encryption.
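The difference between separate and merged build approval rules, described under Enhanced build approval conditions, can be checked with a small model. This is a sketch of the semantics as the post describes them, not TeamCity's implementation: the `Rule` class, the `approved` function, and the sample users alice and bob are all hypothetical, and the model assumes one vote may count toward every rule the voter is eligible for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One approval condition: `votes_needed` approvals from listed users or groups."""

    users: frozenset = frozenset()
    groups: frozenset = frozenset()
    votes_needed: int = 1


def eligible(rule, voter, membership):
    """A voter counts toward a rule if named directly or a member of a listed group."""
    return voter in rule.users or bool(membership.get(voter, set()) & rule.groups)


def approved(rules, voters, membership):
    """Each rule is a separate condition, and all of them must be satisfied."""
    return all(
        sum(eligible(rule, voter, membership) for voter in voters) >= rule.votes_needed
        for rule in rules
    )


# Hypothetical group membership: alice and bob are in QA.
membership = {"alice": {"QA"}, "bob": {"QA"}}

# user:teamlead / user:projectadmin / group:QA:2 as three separate conditions.
separate_rules = [
    Rule(users=frozenset({"teamlead"})),
    Rule(users=frozenset({"projectadmin"})),
    Rule(groups=frozenset({"QA"}), votes_needed=2),
]

# (groups:QA,users:teamlead,projectadmin):2 as one merged condition.
merged_rule = [
    Rule(
        users=frozenset({"teamlead", "projectadmin"}),
        groups=frozenset({"QA"}),
        votes_needed=2,
    )
]

two_votes = {"alice", "teamlead"}
four_votes = {"alice", "bob", "teamlead", "projectadmin"}

separate_with_two = approved(separate_rules, two_votes, membership)
merged_with_two = approved(merged_rule, two_votes, membership)
separate_with_four = approved(separate_rules, four_votes, membership)
```

With one QA member and the team lead voting, the merged rule is satisfied while the three separate rules are not; the separate rules only pass once all four votes are in.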

-

IntelliJ IDEA 2025.1.4 Is Out!

We've just released another update for v2025.1.

You can update to this version from inside the IDE, using the Toolbox App, or by using snaps if you are an Ubuntu user. You can also download it from our website.

This version brings the following refinements:

- Closing labels in Dart have been updated for better usability and now appear as inlay hints, with improved configuration options. [IDEA-289260]
- The "Final declaration can't be overridden at runtime" warning no longer falsely appears for @Configuration classes with proxyBeanMethods. [IDEA-304648]
- It is once again possible to override variables from imported .http files in the HTTP Client. [IJPL-190688]
- Artifacts with circular dependencies between .main and .testwar exploded types can now be built correctly when working with Maven projects. [IDEA-371537]

To see the full list of issues addressed in this version, please refer to the release notes.

If you encounter any bugs, please report them using our issue tracker.

Happy developing!

-

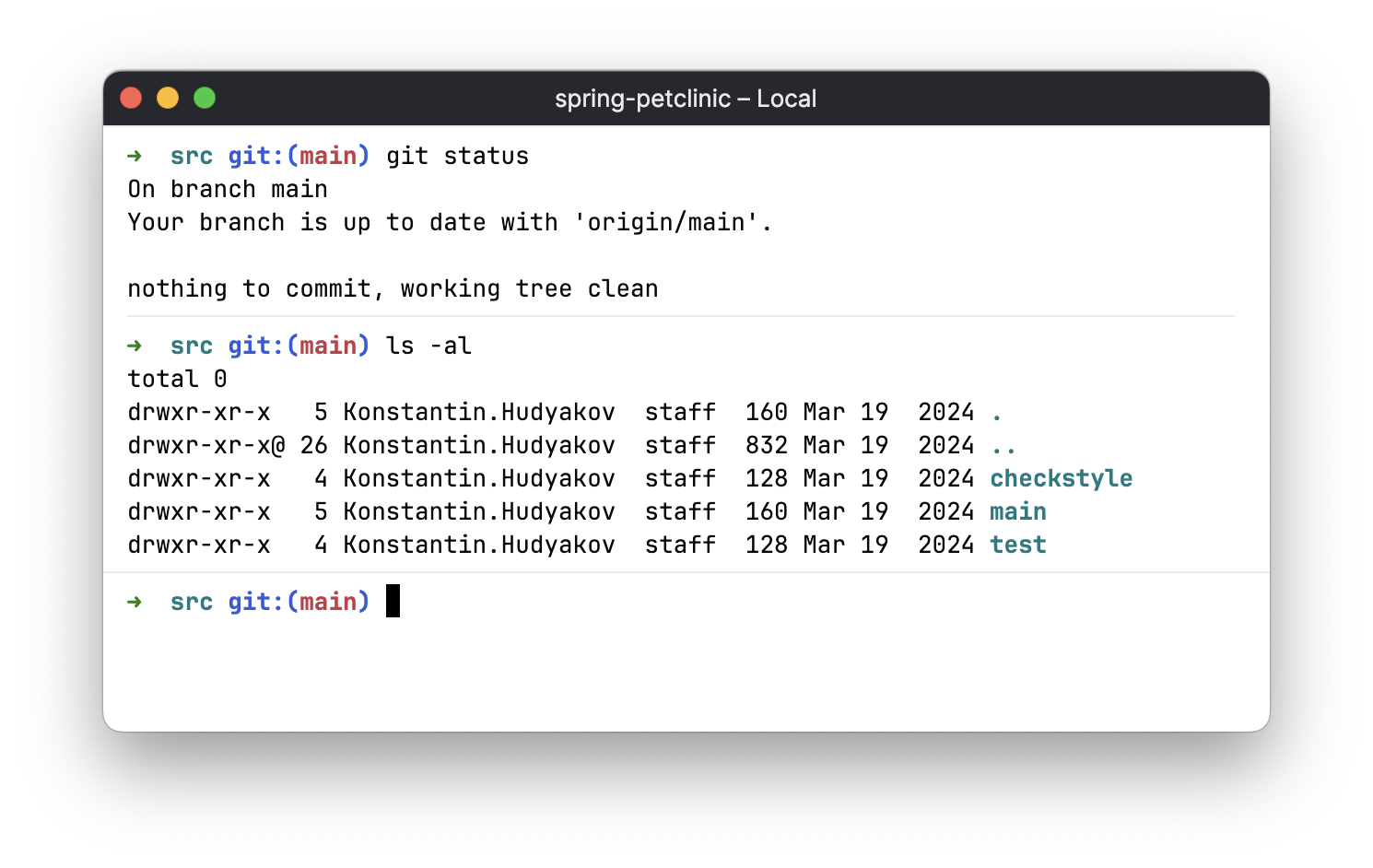

BLOG.JETBRAINS.COM
The Reworked Terminal Becomes the Default in 2025.2

Starting from 2025.2, the default terminal implementation is now the reworked terminal. To create this new implementation, the classic terminal was rewritten almost from scratch to provide the technical foundation for new terminal features like visual separation of executed commands, AI integration, and the ability to work seamlessly in remote development scenarios.

Functionally, it is still the same terminal, but it comes with some novel visual additions.

Separators between executed commands (Bash and Zsh)

We've added separators between executed commands to make it easier to distinguish where commands start and end.

If you wish to hide these separators, you can do so by going to Settings | Tools | Terminal and disabling the Show separators between executed commands option.

For now, this feature is only available when running Bash or Zsh, but we plan to implement it for PowerShell in the next release. You can follow this YouTrack issue for updates.

Updated color scheme

We have updated the color schemes in both light and dark themes to improve the readability of command outputs and alternate buffer app renderings.

If necessary, you can customize these color schemes in Settings | Editor | Color scheme | Console colors | Reworked terminal. If you experience any specific color issues, we encourage you to report these on IJPL-187347.

Performance

Improving performance was one of our main goals, and the reworked terminal is now several times more performant on fast command outputs than the classic terminal.

Remote development

The reworked terminal was built considering the architectural requirements necessary to provide the same user experience and quality in both local and remote development.

Classic terminal still available for backward compatibility

We tried our best to achieve feature parity with the classic implementation, but if some scenarios are not covered in the reworked terminal, you can switch to the classic terminal via the Terminal engine option in Settings | Tools | Terminal.

There are many internal and external dependencies on the Classic Terminal API, so it will remain available for at least two more releases to ensure a smooth transition both for users and plugin writers.

Some functionalities in third-party plugins that rely on the classic terminal implementation might be limited in 2025.2. This might affect plugins that get text from, execute commands in, and add custom key bindings to the classic terminal. We already informed plugin vendors about API changes and published some technical details in the platform community forum.

A work in progress

We're still working on further terminal improvements and would be grateful for your input.

If you have any feedback, feel free to submit it either from the IDE by clicking Share your feedback in the three-dot menu in the Terminal tool window or by submitting a new ticket to our bug tracker.


BLOG.JETBRAINS.COM
IntelliJ IDEA 2025.2 Beta: EAP Closure and Our New Approach to Release Updates

The Beta version of IntelliJ IDEA 2025.2 is now available, marking the end of our Early Access Program for this release cycle.

Download IntelliJ IDEA 2025.2 Beta

Thank you to everyone who used the EAP builds, shared feedback, and helped shape this release. As we mentioned in the EAP opening post, we have moved away from weekly update blog posts to avoid overwhelming you with news about small, incremental changes and focus on what truly matters in each release.

Even without weekly highlights, we continued to receive valuable input and saw steady engagement throughout the eight weeks of the EAP, and we really appreciate your involvement.

Our new approach to sharing release updates

Alongside our work on the product, we've also been rethinking how we communicate what's new in each release in a more structured and thoughtful format.

Now is a good time to give you a preview of this new approach and revisit some of the product priorities we outlined at the beginning of the EAP.

Starting with 2025.2, we're splitting communications into two distinct, but equally important, parts:

- The What's New page will now provide a focused overview of the most impactful updates. In this release, the highlights include early support for emerging technologies like Java 25, Maven 4, and JSpecify, enhanced Spring development support with the new Spring Debugger and Spring Modulith, an improved AI-assisted development experience, and more.
- What's Fixed is a new dedicated blog post where we highlight meaningful quality improvements that make your daily work smoother. For 2025.2, it includes updates to Spring + Kotlin support, functionality for working with WSL, remote development, Junie and AI Assistant, Maven execution, overall performance, and more.

These are just some of the highlights. We'll share the full list of features and fixes in the final release materials.

As always, the release notes will give you a comprehensive list of the changes.

We're also preparing a special 2025.2 Release Highlights livestream with our Developer Advocates, where they'll walk through the key new features and show how they work in real-world scenarios. Keep an eye out: we'll share the date soon!

Getting ready for the 2025.2 release

With the Beta now out, we're focusing on final polishing and stability improvements. As always, all changes are tracked in the release notes. Stay tuned for the full rollout of our new update format, including the detailed What's New and What's Fixed posts, plus the livestream with the team.

We encourage you to try out IntelliJ IDEA 2025.2 Beta and let us know what you think by dropping a line in the comments or contacting us on X or LinkedIn. If you run into any issues, please report them in our issue tracker.

Thanks again for being part of the IntelliJ IDEA community, and happy developing!


Ktor 3.2.2 Is Now Available

The Ktor 3.2.2 patch release brings a critical fix for Android D8 compatibility, along with some minor enhancements and bug fixes.

Get started

Ready to explore Ktor 3.2.2? Start building your next project today with our interactive project generator at start.ktor.io. Your feedback and contributions are always welcome!

Get Started With Ktor | Join the Community on Reddit and Slack

Fixed Android D8 compatibility

Ktor 3.2.0 introduced a compatibility issue for Android's D8 by using Kotlin's backticked identifiers, which are commonly used in testing and allow for non-alphanumeric characters in Kotlin names. Android's D8 tool, which converts bytecode to DEX (Dalvik executable) format, only supports these backticked identifiers on Android 10 (API level 30) and newer.

```kotlin
@Test
fun `hello world returns 200`() = testApplication {
    routing {
        get("/hello") {
            call.respondText("Hello World!")
        }
    }
    val response = client.get("/hello")
    assertEquals(HttpStatusCode.OK, response.status)
}
```

This regression is fixed in Ktor 3.2.2. To prevent this from happening again, we've added new checks to our testing infrastructure. These tests continuously verify Ktor's compatibility with essential Android tools, including D8 and ProGuard, ensuring new features don't break support for older Android versions. More details can be found on YouTrack and on GitHub.

Minor bug fixes and enhancements

Improvements

- Thymeleaf: The template model now accepts null values (KTOR-8559).
- javadoc is now published as a Maven artifact (KTOR-3962).
- Netty: An invalid hex byte with a malformed query string now returns the expected 400 Bad Request error instead of a 500 Internal Server Error (KTOR-2934).

It automatically closes AutoCloseable instances or allows you to configure your own cleanup handlers. When the server receives a stop command, the HikariDataSource will close automatically, and then the custom cleanup handler for Database will be executed.

Regression fixes

- A "Space characters in SimpleName" error is no longer triggered when executing an R8 mergeExtDex task with 3.2.0 (KTOR-8583).
- A potential race condition no longer occurs in socket.awaitClosed (KTOR-8618).
- The module parameter type Application.() -> kotlin.Unit is now supported (KTOR-8602).
- Collecting flow in a Ktor route no longer results in a "Flow invariant is violated" error (KTOR-8606).

Bug fixes

- ForwardedHeaders: The plugin now handles parameters case-insensitively, as expected (KTOR-8622).
- OkHttp: java.net.ProtocolException no longer occurs when sending MultiPartFormDataContent with onUpload (KTOR-6790).
- The OAuth2 authentication provider no longer breaks form-urlencoded POST requests when receiving the request body (KTOR-4420).
- The "You can learn more from Content negotiation and serialization" KDoc link for io.ktor.server.plugins.contentnegotiation.ContentNegotiation now leads to the correct page (KTOR-8597).
- Ktor no longer fails to boot with the default jvminline argument (KTOR-8608).
- The ResponseSent hook handler of the plugin installed into a route is now executed as expected when an exception is thrown from the route (KTOR-6794).

Thank you!

We want to thank everyone in the community for your support and feedback, as well as for reporting issues.

Start building your next project at start.ktor.io. Your suggestions and contributions are always welcome!

Get Started With Ktor | Join the Community on Reddit and Slack

-

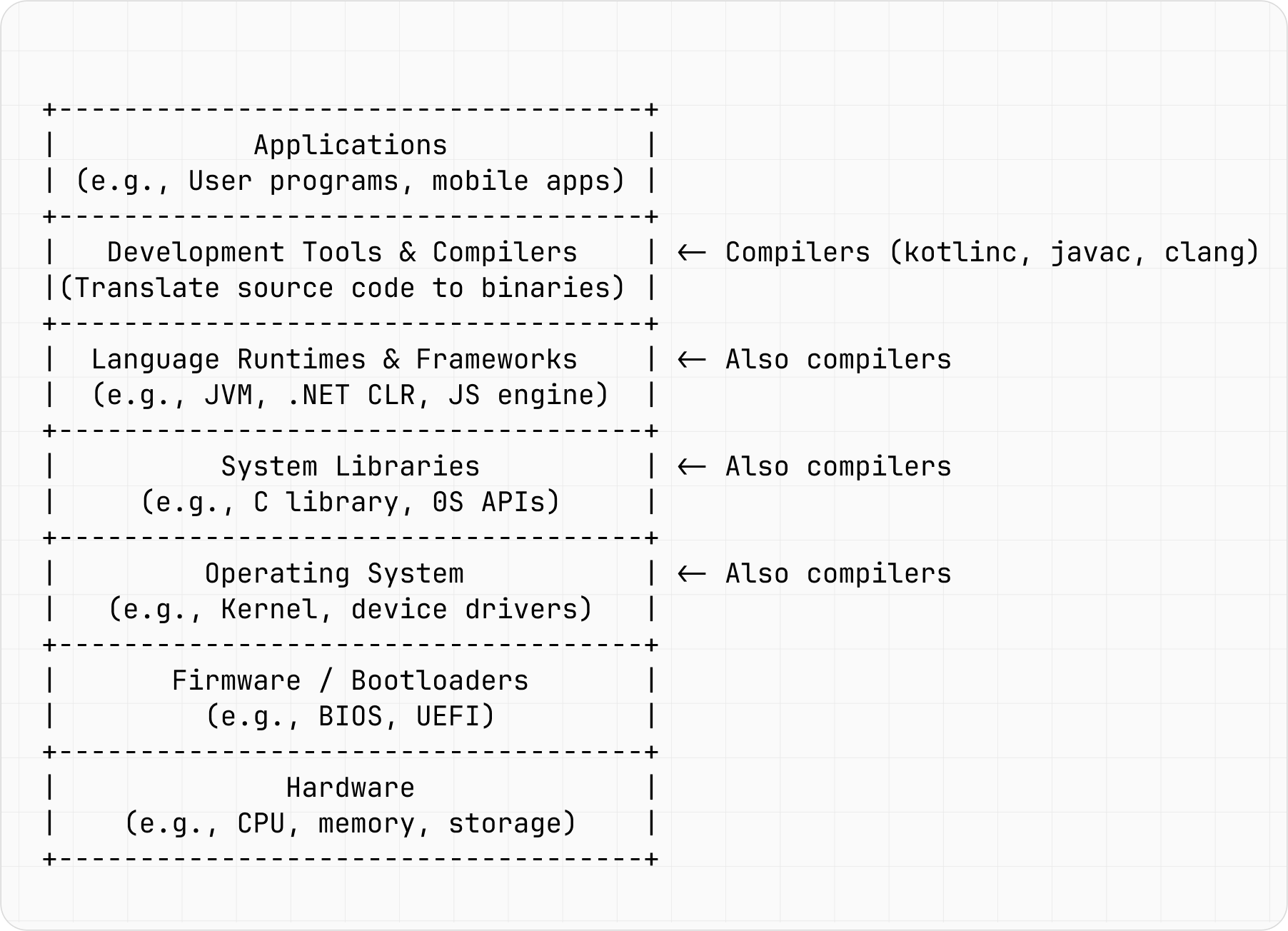

BLOG.JETBRAINS.COM
Breaking to Build: Fuzzing the Kotlin Compiler

At JetBrains, we care about Kotlin compiler quality. One powerful way to test it? Fuzzing, an approach that feeds programs unexpected, often random, inputs to uncover bugs that traditional tests may miss. It may sound chaotic, but it works, especially for complex software like compilers.

In a previous post, our colleagues introduced kotlinx.fuzz, a powerful tool for uncovering bugs in Kotlin programs. As the Kotlin compiler is a Kotlin program, we can use fuzzing to test it, too. In this post we will:

- Explain what fuzzing is and how it compares to traditional compiler testing techniques.
- Show how we applied it to the Kotlin compiler in collaboration with TU Delft.
- Share real bugs we caught and fixed, including in the new K2 compiler.

Compiler fuzzing vs. traditional compiler testing techniques

Compilers are essential to software development, as they transform the code we write into instructions that machines can read and then execute. Compilers are used not once, but multiple times throughout the whole software development stack. Without them you cannot compile your operating system kernel, your system library, or your JavaScript engine. Figure 1 points out where compilers are needed, underlining how crucial they are.

Figure 1: The importance of compilers in the software development stack.

Figure 1 shows that compilers are not just an isolated development tool but are also needed to compile your source code in other places in the stack. This means that when something as important as a compiler contains bugs, it can cause bigger problems, such as:

- Security holes or vulnerabilities
- Incorrect code generation, subtly inconsistent with the original source code
- Performance issues via mistriggered optimization
- Compiler crashes

So how do we ensure that compilers are free of errors? The rest of this section will outline some ways to detect bugs, starting with more traditional techniques and then moving on to fuzzing.

Uncovering bugs: Traditional techniques

Traditional testing techniques focus on program behavior, namely, whether it produces correct output with valid input while properly handling any errors with invalid input. Table 1 lists some common methods.

| What | How |
| --- | --- |
| Unit tests | Check individual components (e.g., lexer, parser, code generator) |
| Functional tests | Verify that all compiler components behave as expected |
| Gold verification tests (regression tests) | Compile a suite of known programs and compare compilation result to the expected output (i.e., gold standard) |
| Integration tests | Check whether compiler runs correctly with other tools or systems (e.g., build systems, linkers, or runtime environments) |
| Cross-platform tests | Test compiler on different operating systems or platforms for consistency |

Table 1: Traditional testing techniques.

These traditional techniques are essential for compiler development, but they may miss subtle bugs that only appear under specific circumstances. This is where advanced techniques like fuzzing can be more successful.

Fuzzing, or the art of breaking things to make them better

At its heart, fuzzing is based on a very simple idea: let's throw a lot of random inputs at a program to see if it breaks. Long before fuzzing was adopted as a named technique, developers have been known to use a form of it. For example, programmers in the 1950s could test programs with a trash-deck technique: they input punch card decks from the trash or random-number punch cards to see when undesirable behavior might occur.

While working on the Apple Macintosh in 1983, Steve Capps developed a tool to test MacWrite and MacPaint applications, which at the time were battling low memory conditions. Called "The Monkey", it fed random events to an application and caused the computer (in this case, the Macintosh) to behave as if it were operated by "an incredibly fast, somewhat angry monkey, banging away at the mouse and keyboard, generating clicks and drags at random positions with wild abandon". To increase testing quality, Capps limited some random events, like menu commands, to a certain percentage, and later Bill Atkinson defined a system flag to disable commands such as quit, so the test could run as long as possible. Although the tool itself became obsolete when later Macintosh iterations freed up more memory, it is an important part of software testing history.

The term "fuzzing" is credited to Professor Barton Miller, who first experienced interference noise on a dial-up link when remotely logging into a Unix system during a storm in 1988. This noise caused the programs to crash, a surprise considering they were common Unix utilities. So that this effect could be explored more in depth, Miller then assigned his students a project to evaluate the robustness of various Unix utility programs, given an unpredictable input stream. One student group went on to publish a 1990 paper with Miller on their findings.

Fuzzing fundamentals

In contrast to carefully designed test cases like those in Table 1 above, fuzzing uses randomly generated inputs that can be unexpected or even deliberately nonsensical. The more diverse the inputs, the more likely the fuzzing tool (i.e., the fuzzer) will be to find unexpected issues.

A fuzzer has three essential components: (i) an input data generator, which generates diverse inputs, (ii) the software under test (sometimes abbreviated as SUT), which processes these inputs, and (iii) a reference model, which produces the correct output from the same inputs. Both the software and the reference model produce outputs from the same inputs, and these outputs are compared to one another.
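To make the three components concrete, here is a minimal sketch in Kotlin. It is not taken from the post or from kotlinx.fuzz. The function names (`absUnderTest`, `absReference`, `generateInput`, `fuzz`), the planted bug, and the input range are all hypothetical, chosen only to illustrate the generate, run, and compare loop.

```kotlin
import kotlin.random.Random

// (ii) Hypothetical software under test: an absolute-value function
// with a deliberately planted bug for the input -8.
fun absUnderTest(x: Int): Int = if (x < 0 && x != -8) -x else x

// (iii) Reference model: a trusted implementation of the same behavior.
fun absReference(x: Int): Int = kotlin.math.abs(x)

// (i) Input data generator: random integers from -10 to 10 inclusive.
fun generateInput(random: Random): Int = random.nextInt(-10, 11)

// The fuzzing loop: generate an input, run both implementations,
// compare their outputs, and collect every input on which they disagree.
fun fuzz(iterations: Int, seed: Int): Set<Int> {
    val random = Random(seed)
    val failing = mutableSetOf<Int>()
    repeat(iterations) {
        val input = generateInput(random)
        // A crash in the software under test is mapped to null,
        // so it also counts as a mismatch.
        val actual = runCatching { absUnderTest(input) }.getOrNull()
        val expected = absReference(input)
        if (actual != expected) failing += input
    }
    return failing
}
```

Calling `fuzz(iterations = 10_000, seed = 42)` returns the set of inputs on which the two implementations disagree. With this toy generator, the planted bug is the only possible mismatch.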
Figure 2 depicts the relationship between the three components, the outputs to be compared, and the fuzzing result.

Figure 2: Fuzzing basics.

The fuzzing result tells us whether or not the software performed correctly with the automatically generated input, as compared to the reference model with that same input. This process is repeated over and over, represented in the above image by multiple windows. This repetition ensures that the inputs used for testing are sufficiently diverse.

The idea is to find inputs which cause the software to fail, either by crashing or producing incorrect results. It's not so much about testing the intended functionality. That is, fuzzing investigates the edge cases of what the software can or cannot handle.

Compiler fuzzing techniques

So far, we've covered the basic idea of fuzzing and a brief history of it. In this section, we will get more specific, starting with different ways to fuzz compilers.

Two fuzzing types that are more advanced are generative fuzzing, which constructs test programs from scratch based on the grammar or specification of the target inputs, and mutation-based fuzzing, which starts with existing, valid programs and modifies them to create new test inputs. Modifications (mutations) can include inserting, deleting, or replacing input parts. Table 2 lists pros and cons for each.

Table 2: A comparison of generative and mutation-based fuzzing.

Figure 3 further compares the two techniques to basic fuzzing, underlining their trade-offs on how well they cover different parts of the compiler vs. how deep they can explore it.

Figure 3: A comparison of fuzzing types.

This section has described fuzzing basics and how fuzzing is an effective method for ensuring a high standard in compiler quality. Going forward, we will focus on fuzzing the Kotlin compiler specifically.

Kotlin compiler fuzzing

Fuzzing is already a widespread practice for many programming languages, from C++ and JS to Java. At JetBrains, our Kotlin team is no stranger to fuzzing the Kotlin compiler. We have collaborated with external research groups to try to break the compiler with differing fuzzing approaches. One of the first collaborations gave us a great example of how to crash kotlinc using 8 characters and the main function. This is shown below.

```kotlin
fun main() {
    (when{}) // Crash in Kotlin 1.3, fixed in 1.5
}
```

You can also access the example on Kotlin Playground in Kotlin 1.3 and Kotlin 1.5 versions, i.e., before and after the bug was fixed. The following code blocks contain two more examples of bugs found by fuzzing.

```kotlin
fun <break> foo() // Compilation crash on type parameter named `break`
fun box(){
    foo()
}
```

This example shows a compiler crash when it encounters a reserved identifier (such as break) in the position of a type parameter.

```kotlin
fun box(): String {
    return if (true) {
        val a = 1 // No error when we are expected to return a `String`
    } else {
        "fail:"
    }
}
fun main(args: Array<String>) {
    println(box())
}
```

The above example shows a miscompilation in which the compiler failed to reject an incorrect program: the if expression should return a String, but the true branch does not return anything.

Figure 4 displays an incomplete trophy list of found-by-fuzzer bugs, demonstrating fuzzing's usefulness. Many of the reported bugs greatly helped us in the K2 compiler stabilization.

Figure 4: Incomplete trophy list of found-by-fuzzer bugs.

Evolutionary generative fuzzing

Last year, we collaborated with TU Delft's SERG team to more rigorously explore the properties of generative fuzzing. In this 2024 paper, we looked at generative fuzzing as an evolutionary algorithm problem and developed a fuzzing approach: Evolutionary Generative Fuzzing. This subsection will describe our approach in more detail.

First, let's break down the term "evolutionary generative fuzzing". As discussed above, generative fuzzing is a bottom-up approach to creating program inputs, generating them following a specific set of rules. In the present case, the generation rules are based on the language syntax, or the Kotlin grammar, and its semantics, or the Kotlin specification.

The term's "evolutionary" part comes from evolutionary algorithms, an approach that simulates the real evolutionary process. Evolutionary algorithms and a common subtype, genetic algorithms, are optimization techniques that use natural selection and genetic inheritance principles for solving complex problems in programming.

First we will talk about the basic concepts of a genetic algorithm, then we will apply these concepts to fuzzing. Essentially, these types of algorithms are meant to mimic the way living organisms evolve over generations to adapt and survive in their environments. Figure 5 depicts this cycle.

Figure 5: Genetic algorithm cycle.

As a problem-solving technique, a genetic algorithm starts with a specific problem, and for this problem there are multiple candidate solutions. Each solution represents an individual (sometimes called a chromosome), which could be, for example, a specific input for a function. A set of individuals represents a population, just like in the natural world. To begin the algorithm, an initial population must be generated, typically a set of random individuals (i.e., candidate solutions). After the initial population is generated, a fitness function evaluates the suitability of each individual within the population, retaining only the fittest individuals to move on to the next generation. Then, the set of fit individuals mutate and recombine to form a new population. This new combination of individuals makes up the next generation's population.

Let's apply this to our Kotlin compiler fuzzing approach, represented in the more specialized version of the evolutionary cycle in Figure 6.
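Before turning to the Kotlin-specific version, the generic cycle described above (initial population, fitness evaluation, selection, mutation and recombination) can be sketched in a few lines of Kotlin. This is a toy illustration only, not the fuzzer from the paper. It assumes a hypothetical problem in which an individual is a list of Boolean genes and fitness is simply the number of `true` genes. All names and parameters (`evolve`, the 5% mutation rate, keeping the top half) are assumptions made for the example.

```kotlin
import kotlin.random.Random

// Hypothetical toy problem: an individual is a list of Boolean genes.
typealias Individual = List<Boolean>

// Fitness function: the number of `true` genes (higher is fitter).
fun fitness(individual: Individual): Int = individual.count { it }

fun randomIndividual(length: Int, random: Random): Individual =
    List(length) { random.nextBoolean() }

// Mutation: flip each gene independently with the given probability.
fun mutate(individual: Individual, rate: Double, random: Random): Individual =
    individual.map { gene -> if (random.nextDouble() < rate) !gene else gene }

// Recombination: single-point crossover of two parents.
fun recombine(a: Individual, b: Individual, random: Random): Individual {
    val cut = random.nextInt(1, a.size)
    return a.take(cut) + b.drop(cut)
}

// Runs the cycle for a number of generations and returns the fittest individual.
fun evolve(generations: Int, populationSize: Int, length: Int, seed: Int): Individual {
    require(populationSize >= 2 && length >= 2)
    val random = Random(seed)
    // Initial population: random candidate solutions.
    var population = List(populationSize) { randomIndividual(length, random) }
    repeat(generations) {
        // Selection: only the fitter half moves on to the next generation.
        val survivors = population.sortedByDescending(::fitness).take(populationSize / 2)
        // Reproduction: survivors recombine and mutate to refill the population.
        val children = List(populationSize - survivors.size) {
            val child = recombine(survivors.random(random), survivors.random(random), random)
            mutate(child, rate = 0.05, random = random)
        }
        population = survivors + children
    }
    return population.sortedByDescending(::fitness).first()
}
```

Because the survivors are carried over unchanged, the best fitness in the population never decreases from one generation to the next. That is a design choice of this sketch and not something the post prescribes.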
The lighter-colored and smaller rectangles attached to the larger ones represent the relevant action needed to reach each stage.

Figure 6: Genetic algorithm in the evolutionary generative fuzzing approach.

To begin, the individuals are Kotlin code snippets created with generative fuzzing. To create the initial population, we sample multiple code snippets, which are then evaluated by a fitness function. And to simulate reproduction, we split each snippet into code blocks, where each block represents an individual's chromosome. These code-block chromosomes then mutate and recombine to create new code snippets, which are sampled to create the next generation's population. An important takeaway of the approach is: The evolutionary generative fuzzer is not purely generative: it is also partially mutation-based.

As mentioned above, a fitness function plays an integral part in the evolutionary cycle. In this work we hypothesized that structurally diverse code is more likely to exercise different compiler components than more uniform code; by stressing various compiler components, more bugs can be uncovered. To test this, we investigated a baseline and two different ways to calculate the fitness function, both being attempts to optimize the sampled code's diversity.

As a baseline, our approach used what is called random search (RS), as it samples programs without any fitness function. We also used different measures of diversity realized through the fitness functions to apply two novel types of genetic algorithms (GAs): single-objective and many-objective functions for the single-objective diversity GA (SODGA) and many-objective diversity GA (MODGA), respectively. The details of these two genetic algorithms and the baseline are summarized in Table 3.

Table 3: Approaches and their fitness-function details.

The generated code was then compiled with both the original K1 compiler and the new K2 compiler released with Kotlin 2.0. This allowed us to focus on K1-to-K2 changes and find situations when the new compiler introduces regressions or unexpected behavior changes.

Applying any approach listed in Table 3 results in finding interesting bugs, and the different approaches complement each other in terms of bug-detecting categories: random search (RS in Table 3) is effective for detecting more straightforward differential bugs such as out-of-memory errors and resolution ambiguity, whereas the two genetic algorithms (SODGA and MODGA in Table 3) are successful in detecting nuanced problems such as with conflicting overloads, shown in the code example below.

```kotlin
fun main() {
    fun p(): Char { return 'c' }
    fun p(): Float { return 13.0f }
}
```

The intended behavior of the above code block is that the compiler reports a conflicting overloads error, as the two p functions should cause a resolution conflict. The K1 compiler returns the appropriate error, while K2 compiles the code without any warning. By applying two types of genetic algorithms, we were able to uncover several independent instances of this bug, and we fixed this error in Kotlin 2.0.

Overall, our team's complex approach to Kotlin compiler fuzzing has led to the discovery of previously unknown bugs. In combining both generative and mutation-based fuzzing, we have been able to enhance bug discovery.

The future of fuzzing

Fuzzing has already enabled our team to find and fix many compiler bugs, improving the Kotlin compiler. Our research collaboration with TU Delft's SERG team produced a more advanced approach to fuzzing, combining generative and mutation-based methods, and with this foundation we can fine-tune fuzzing even more.

Fuzzing is an active field of research with many opportunities for advancement, and fuzzing a complex, industrial language like Kotlin presents unique challenges that require careful attention for the advancement of fuzzing, as well as for the results to be useful. These challenges and their possible solutions are listed in Table 4.

| Challenge | Possible solution |
| --- | --- |
| Maintaining code validity, with code that is both syntactically correct and semantically meaningful. Often requires fuzzer implementation to include parts tailored to target language. | Custom handwritten generators for more complicated parts of Kotlin |
| Finding different types of bugs and thoroughly testing all compiler parts to ensure good compiler code coverage. | Coverage-guided fuzzing: one component of the fitness function is X if a code snippet reaches so far unexplored compiler parts |
| After finding bugs, localizing faults by determining the exact cause and the code responsible. Fuzzers often produce large amounts of test cases, which require manual analysis. | MODGA, as it naturally prefers generating smaller and easier-to-understand programs |
| A good fuzzer needs to avoid duplicates and trigger new, unique bugs rather than the same bugs repeatedly. | For now, this problem is solved manually, but there are different possibilities to do this automatically |

Table 4: Fuzzing challenges and possible solutions.

Our Kotlin compiler team continues to address these challenges by improving fuzzing capabilities in an incremental fashion. We want to keep on providing you with reliable software, and that includes reducing distractions so that you can focus on having fun while developing in Kotlin!

More fuzzing resources

Curious to explore the research or try out fuzzing on your own Kotlin code? Check out these resources!

- Our paper in collaboration with TU Delft's SERG team
- A post about input reduction in Kotlin compiler fuzzing
- The Fuzzing Book, a textbook available online with lots of detailed information and great examples
- Fuzz Testing: A Beginner's Guide, an article with more about different types of fuzzing and how to try it yourself
- A Survey of Compiler Testing, another academic article