Apple's new AI-powered transcription outperforms OpenAI's Whisper

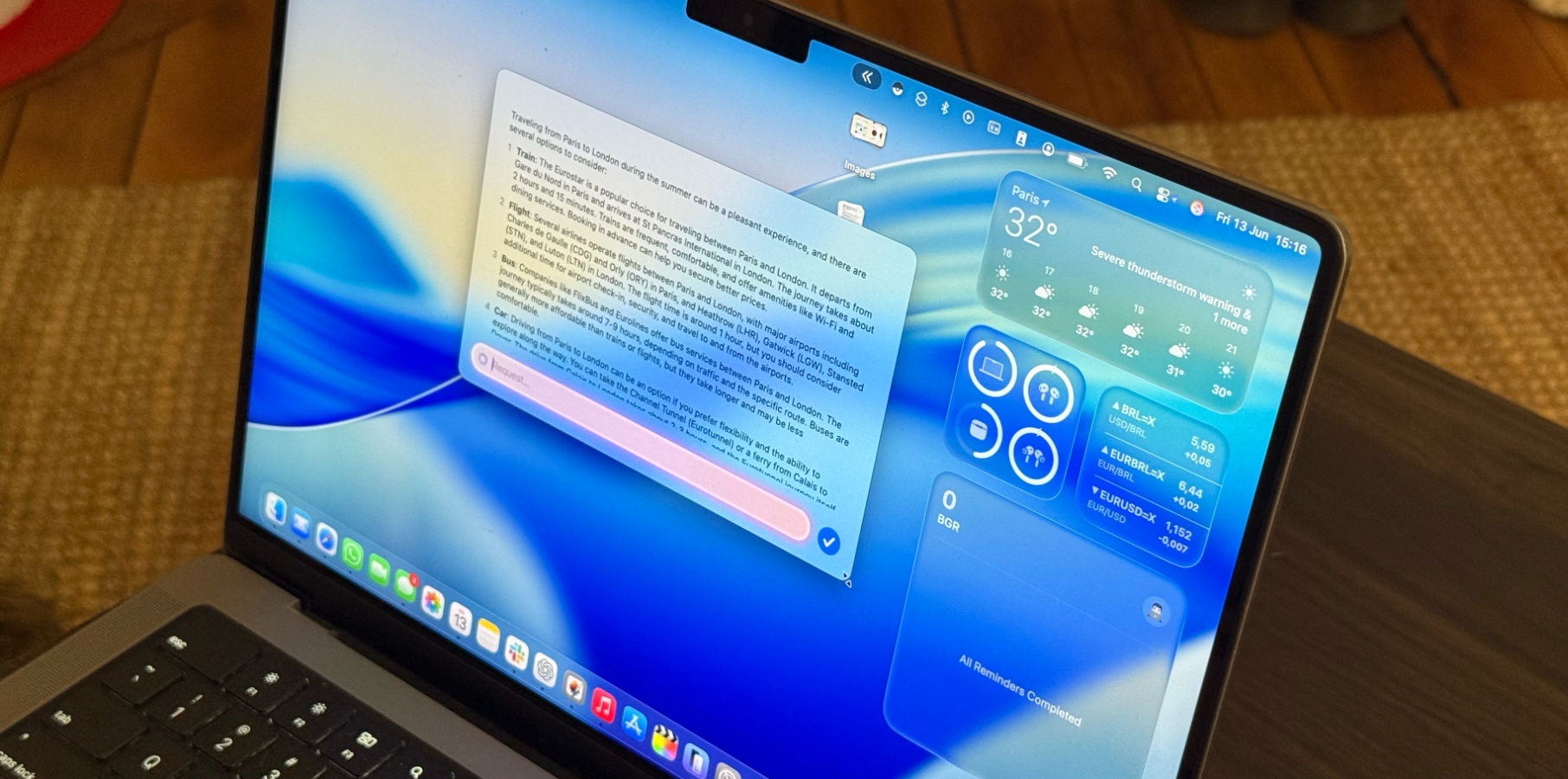

It appears Apple has several under-the-hood AI improvements in the works for iOS 26 and macOS Tahoe. While most of the features build on what's already available, the company will also offer a chatbot-like experience for those who'd like to talk to Apple Intelligence privately through the Shortcuts app, and it has a very good speech API that outpaces OpenAI's Whisper.

At least, that's what MacStories' John Voorhees claims in his hands-on report. He asked his son to build Yap, a "simple command-line utility that takes audio and video files as input and outputs SRT- and TXT-formatted transcripts."

In his tests, Yap transcribed a 7GB 4K video version of a 34-minute AppStories podcast episode and generated an SRT file in only 45 seconds. When he ran the same file through other AI transcription tools, Apple's model outperformed all of them:

- Yap: 45 seconds
- MacWhisper (Large V3 Turbo): 1 minute and 41 seconds
- VidCap: 1 minute and 55 seconds
- MacWhisper (Large V2): 3 minutes and 55 seconds

Apple's AI transcription model isn't flawless, and it still had trouble with last names and words like "AppStories." Even so, Voorhees was impressed by Yap's speed: it finished in about 55% less time than MacWhisper running Large V3 Turbo, the fastest Whisper-based option in the test (45 seconds versus 1 minute and 41 seconds), while achieving the same transcription quality.

That said, once iOS 26 and macOS Tahoe are released, you'll probably see new apps taking advantage of Apple's latest AI models to analyze and transcribe speech. Since these models are free for developers to use, they will likely improve the market for audio transcription.

Currently, these features are limited to developers running the beta versions of iOS 26, macOS Tahoe, and Xcode 26.

The post Apple's new AI-powered transcription outperforms OpenAI's Whisper appeared first on BGR.