ChatGPT is getting a health upgrade, this time for users themselves.

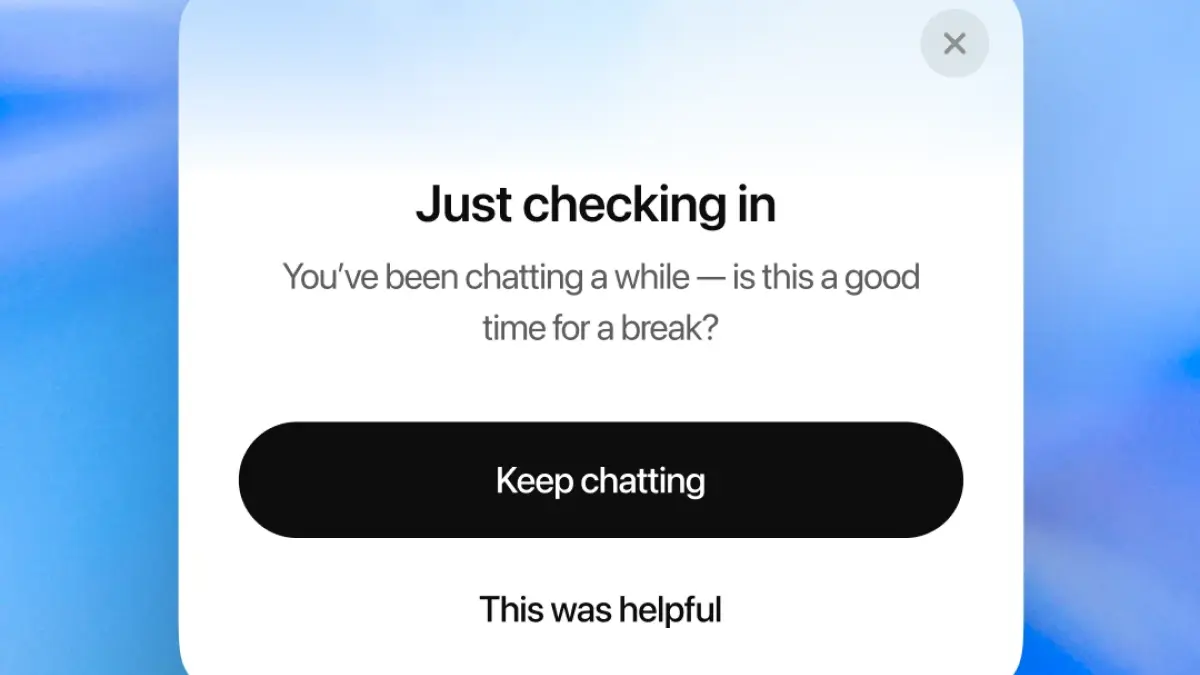

In a new blog post ahead of the company's reported GPT-5 announcement, OpenAI announced that it would refresh its generative AI chatbot with new features designed to foster healthier, more stable relationships between user and bot. Users who have spent a prolonged period in a single conversation, for example, will now get a gentle nudge to log off. The company is also doubling down on fixes to the bot's sycophancy problem, and building out its models to recognize mental and emotional distress.

ChatGPT will respond differently to more "high stakes" personal questions, the company explains, guiding users through careful decision-making, weighing pros and cons, and responding to feedback rather than providing answers to potentially life-changing queries. This mirrors OpenAI's recently announced Study Mode for ChatGPT, which scraps the AI assistant's direct, lengthy responses in favor of guided Socratic lessons intended to encourage greater critical thinking.

"We don’t always get it right. Earlier this year, an update made the model too agreeable, sometimes saying what sounded nice instead of what was actually helpful. We rolled it back, changed how we use feedback, and are improving how we measure real-world usefulness over the long term, not just whether you liked the answer in the moment," OpenAI wrote in the announcement. "We also know that AI can feel more responsive and personal than prior technologies, especially for vulnerable individuals experiencing mental or emotional distress."

Broadly, OpenAI has been updating its models in response to claims that its generative AI products, specifically ChatGPT, are exacerbating unhealthy social relationships and worsening mental illnesses, especially among teenagers. Earlier this year, reports surfaced that many users were forming delusional relationships with the AI assistant, worsening existing psychiatric disorders, including paranoia and derealization. In response, lawmakers have turned their attention to regulating chatbot use more strictly, along with the marketing of chatbots as emotional partners or replacements for therapy.

OpenAI has recognized this criticism, acknowledging that its previous 4o model "fell short" in addressing concerning behavior from users. The company hopes that these new features and system prompts can do the work its previous versions failed to do.

"Our goal isn’t to hold your attention, but to help you use it well," the company writes. "We hold ourselves to one test: if someone we love turned to ChatGPT for support, would we feel reassured? Getting to an unequivocal 'yes' is our work."